Apply filter pushdown to source rows for the right outer join of matched only case #438


base: master


Conversation

Gentle ping @tdas @zsxwing @jose-torres @brkyvz

Any comments? Gentle ping @tdas @brkyvz @zsxwing @gatorsmile

Any reason why this was not merged or at least reviewed?


LGTM

@@ -214,7 +214,7 @@ case class MergeIntoCommand(
   private def isMatchedOnly: Boolean = notMatchedClauses.isEmpty && matchedClauses.nonEmpty

   override lazy val metrics = Map[String, SQLMetric](
-    "numSourceRows" -> createMetric(sc, "number of source rows"),
+    "numSourceRows" -> createMetric(sc, "number of source rows participated in merge"),


nit: s/participated in merge/involved

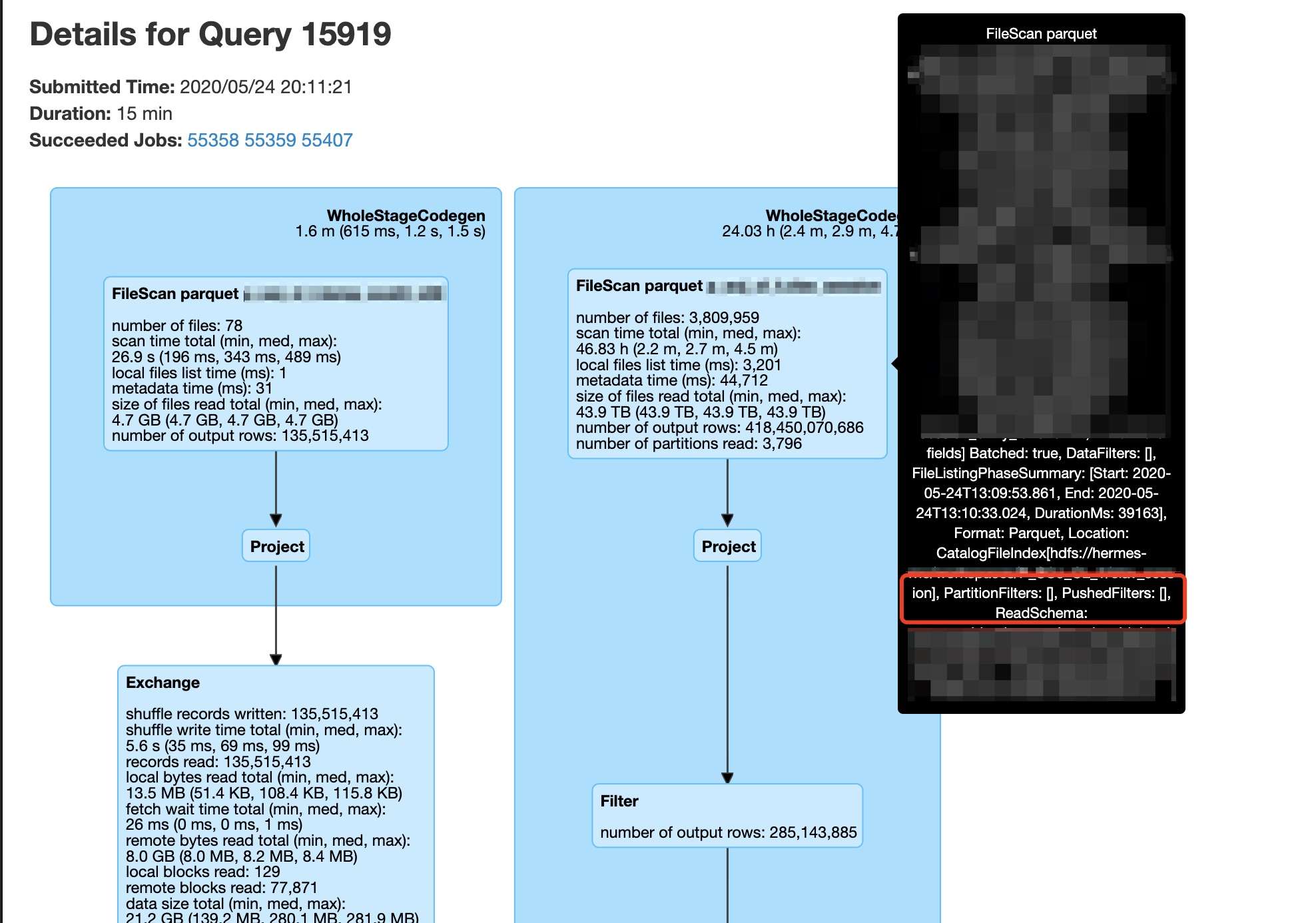

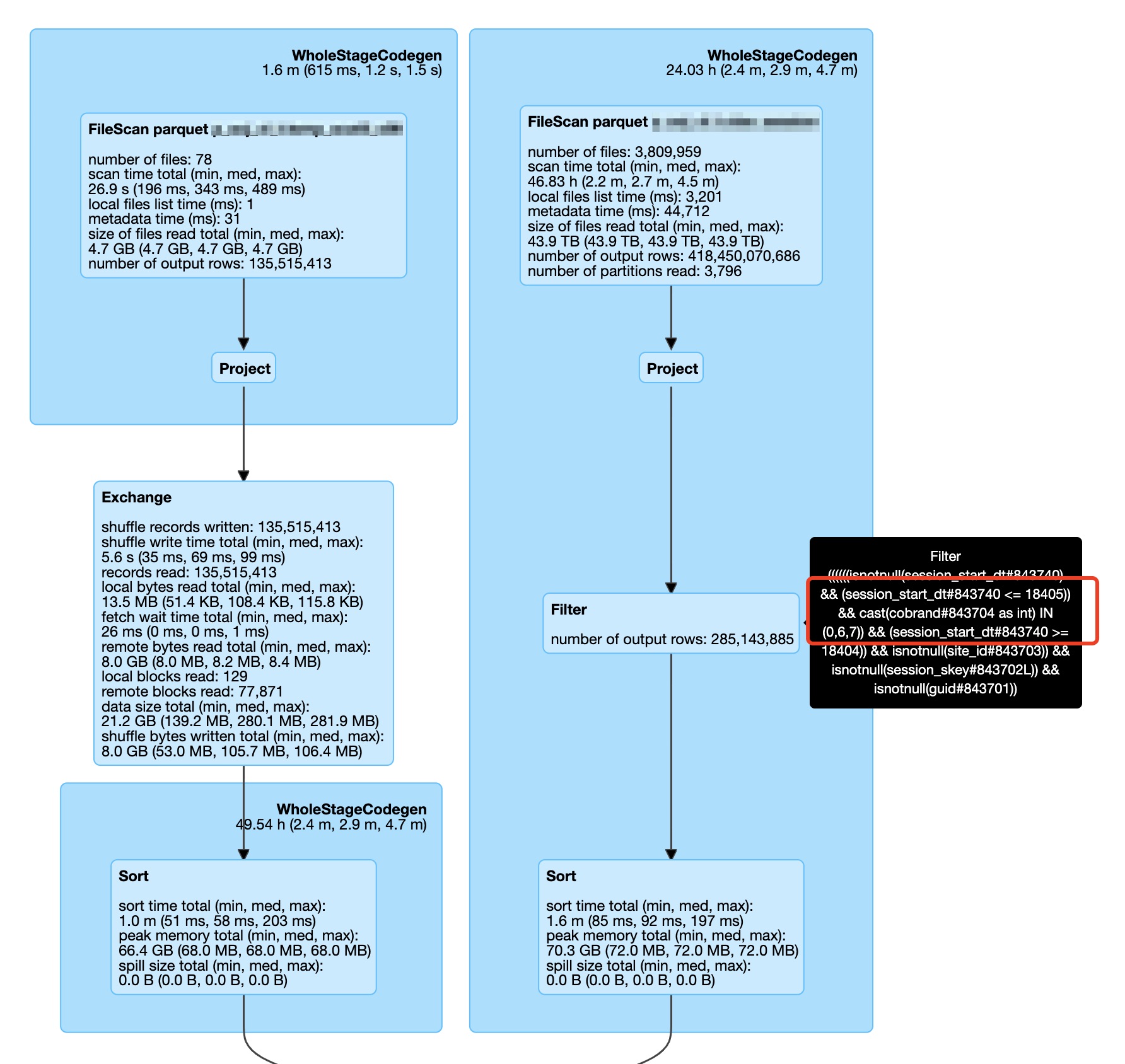

The reason for this optimization is similar to #432.

In the matched-only case, we use a right outer join between source and target to write the changes.

Due to the non-deterministic UDF `makeMetricUpdateUDF`, predicate pushdown is not applied to the source rows. This PR manually adds a filter before the `Projection` that contains the non-deterministic UDF, so that filter pushdown is triggered.

Besides the performance improvement from filter pushdown, this change matters for memory: without it, the Spark driver can easily run into frequent full GCs when the source table contains a very large number of files:

(In our internal version, we use target left outer join source instead of source right outer join target, so the right side in the graphs is the source table.)

From the class histogram taken while the Spark driver was doing full GCs, we can see 3.8 million `SerializableFileStatus` objects, which roughly matches the file count of the source table in the graphs. This memory stays referenced and cannot be garbage collected while the join is being processed.

After applying this optimization, the frequent full GC problem caused by this scenario went away, and the performance of this outer join improved greatly.
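
The effect of filter placement described above can be reproduced outside of Delta with a small sketch. This is not the code from this PR: `FilterPlacementSketch`, `build`, the `row_seen` column and the always-true `countRow` UDF (a stand-in for `makeMetricUpdateUDF`) are hypothetical names, and the Parquet directory is a throwaway temp path. It only illustrates that Catalyst does not move a filter below a projection containing a non-deterministic expression, whereas a filter applied before that projection can reach the scan.

```scala
import java.nio.file.Files

import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, udf}

object FilterPlacementSketch {

  /** Returns (filter applied after the UDF projection, filter applied before it). */
  def build(spark: SparkSession): (DataFrame, DataFrame) = {
    import spark.implicits._

    // Write a tiny Parquet "source table" so the plans contain a real file scan.
    // The child path must not exist yet, hence resolve("source").
    val path = Files.createTempDirectory("pushdown-sketch").resolve("source").toString
    Seq((1, "a"), (2, "b"), (3, "c")).toDF("key", "value").write.parquet(path)
    val source = spark.read.parquet(path)

    // Hypothetical stand-in for makeMetricUpdateUDF: always true, but marked
    // non-deterministic so the optimizer must not reorder or duplicate it.
    val countRow = udf(() => true).asNondeterministic()

    // Assumed source-only predicate, e.g. extracted from the merge condition.
    val sourcePredicate = col("key") > 1

    // Filter sits above the non-deterministic projection and stays there.
    val filterAfter = source.withColumn("row_seen", countRow()).filter(sourcePredicate)

    // Filter is applied first, so it is eligible for pushdown to the scan.
    val filterBefore = source.filter(sourcePredicate).withColumn("row_seen", countRow())

    (filterAfter, filterBefore)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("filter-placement-sketch")
      .getOrCreate()
    try {
      val (filterAfter, filterBefore) = build(spark)
      // Compare where the Filter node and the scan's pushed filters appear.
      filterAfter.explain(true)
      filterBefore.explain(true)
    } finally {
      spark.stop()
    }
  }
}
```

Both variants return the same rows; the difference is visible only in the plans printed by `explain(true)`, which is where the scan-level filtering (and, for a table with many files, the amount of work and state kept for the join) differs.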